Sentiment detection is one of the simplest, most accurate and most powerful AI tools available to researchers, particularly for conducting qualitative research at scale.

We use sentiment in three ways:

- To look at the sentiment of an entire comment: overall, when reading a sentence, does it express a positive sentiment or a negative one?

- To analyse the sentiment of specific words (entities) within a sentence. Say someone uses the word ‘father’ in a sentence: were they imbuing that word with positivity or negativity?

- To express empathy or to probe when asking questions. For example, when someone says something negative, our bot can respond with ‘I am sorry to hear that, can you tell me a bit more about that?’
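To make the third use concrete, here is a minimal sketch of how a bot might choose a follow-up question from a sentiment score. It assumes a score between -1 and +1; the thresholds, the function name `follow_up` and the positive and neutral wordings are hypothetical and are not taken from our production bot.

```python
# Hypothetical cut-offs for "clearly negative" and "clearly positive".
NEGATIVE_THRESHOLD = -0.25
POSITIVE_THRESHOLD = 0.25


def follow_up(sentiment_score: float) -> str:
    """Pick a probing question for a sentiment score in the range -1 to +1."""
    if sentiment_score <= NEGATIVE_THRESHOLD:
        # Empathetic probe, using the wording quoted in the post.
        return "I am sorry to hear that, can you tell me a bit more about that?"
    if sentiment_score >= POSITIVE_THRESHOLD:
        # Hypothetical wording for a positive response.
        return "That's great to hear, what did you like most?"
    # Hypothetical neutral probe for scores near zero.
    return "Can you tell me a bit more about that?"


print(follow_up(-0.9))
```

In practice the cut-offs would need tuning against real responses, since a score close to zero can mean either a neutral comment or a mixed one.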

We have used this combination of AI tools across a number of studies and, as the example in this video demonstrates, it’s an extremely accurate tool. In this video we demonstrate how we’ve combined entity, sentiment and magnitude to understand how people responded spontaneously to an ad. Our method was to play an ad through the chatbot. Once the ad has played, we ask ‘What thoughts and feelings went through your mind as you watched that?’

Don’t worry, we of course go on to ask a range of further questions, but this initial question is extremely powerful at helping to predict how effective an ad is.

This example is from a McDonald’s ad.

By averaging the overall sentiment across all these responses (in this case .38 on a scale from -1 to +1), we can see that responses were more positive than negative overall. When we also overlay magnitude (.75, or 75%), we can see that responses were very positive on average.
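The averaging step is simple arithmetic. The sketch below shows one way to do it, assuming each response has already been given a sentiment score and a magnitude by whatever sentiment tool is in use. The four pairs are invented for illustration and are not data from the McDonald’s study, and `summarise` is a hypothetical helper name.

```python
from statistics import fmean

# Illustrative (sentiment score, magnitude) pairs, invented for this sketch.
responses = [
    (0.9, 0.9),
    (0.8, 0.8),
    (0.1, 0.6),
    (-0.5, 0.7),
]


def summarise(pairs):
    """Return the mean sentiment score and the mean magnitude."""
    scores = [score for score, _ in pairs]
    magnitudes = [magnitude for _, magnitude in pairs]
    return fmean(scores), fmean(magnitudes)


avg_score, avg_magnitude = summarise(responses)
print(f"average sentiment: {avg_score:.2f}, average magnitude: {avg_magnitude:.2f}")
```

Reading the two numbers together is the point: an average score above zero says the balance is positive, and the magnitude indicates how strongly people expressed themselves.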

Here are some examples of comments with the highest sentiment score:

- My loving family 0.9

- Happy ad 0.9

- It is a good story 0.9

- It’s a lovely ad, it was enjoyable to watch 0.9

- i liked the ad it had a good story line 0.9

- Fun to watch 0.9

And the lowest sentiment scores:

- Boring pass on it -0.9

- Very corny nonsense -0.9

- made me shudder with revulsion -0.9

Unlike many other AI tools that are available, sentiment and entity analysis rarely get it wrong, and when they do, it tends to be when someone uses sarcasm and/or makes spelling errors. This response has both, and yet the sentiment score of -.5 feels about right:

- What the hell was it about? just another bank add, no wait it turned out just as bad if not worse, that bloody McDonalds -0.5

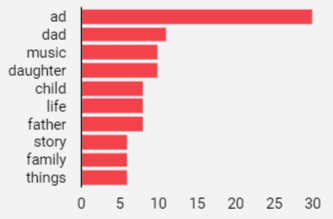

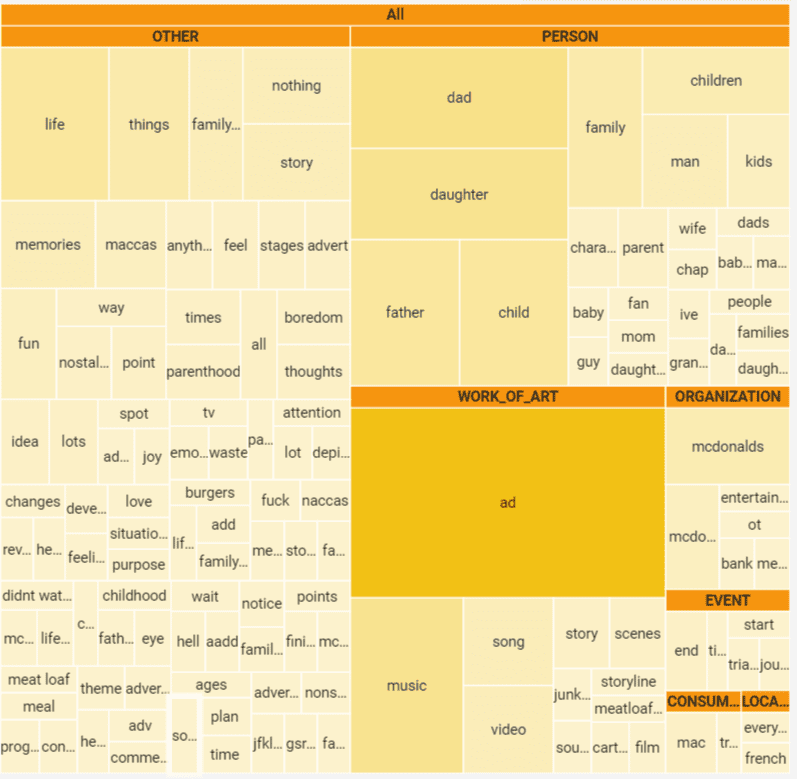

If this is all we did, I think we would have an extremely useful metric. But we go further. Next we overlay entities – the themes within the text grouped into things like people, places, works of art, locations and other ‘things’. We can extract the key entities from responses to this question like this:

But we can also look at them in groups like this:

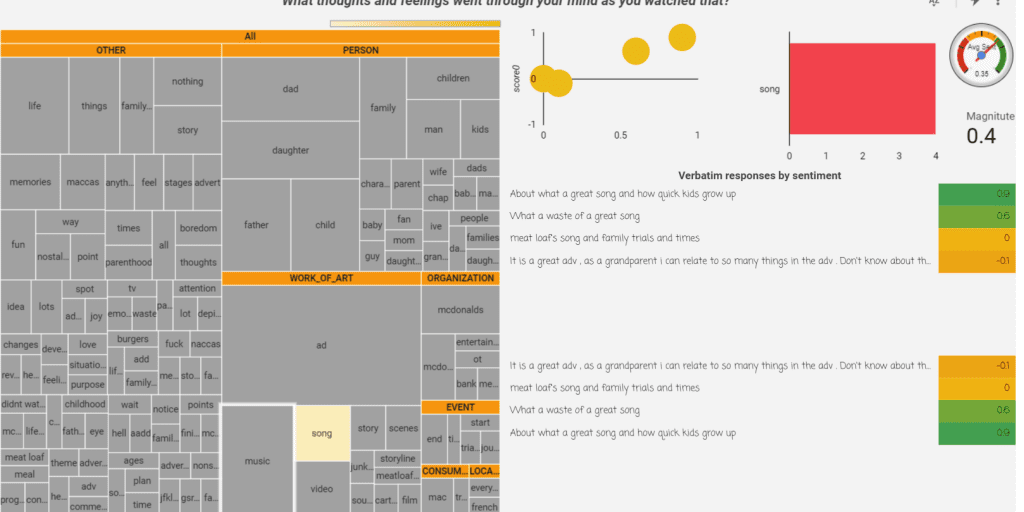

And then we look at the sentiment behind each of these. When I click on the ‘work of art’ group I can see that sentiment jumps up from its average of .29 to .43! And if I click on any one named entity, like ‘song’, it goes to .35 and I can read the comments that relate to the song.
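A rough sketch of this kind of drill-down is below. It assumes each entity mention comes with a name, a type and its own sentiment score. The mentions, the type labels and the `average_by` helper are all hypothetical and are not the dashboard’s actual data or code.

```python
from collections import defaultdict
from statistics import fmean

# Hypothetical entity mentions: (entity name, entity type, sentiment score).
mentions = [
    ("song", "WORK_OF_ART", 0.4),
    ("song", "WORK_OF_ART", 0.3),
    ("story", "WORK_OF_ART", 0.6),
    ("father", "PERSON", 0.2),
    ("family", "PERSON", 0.8),
]


def average_by(rows, key_index):
    """Average the sentiment score (position 2) grouped by the chosen field."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key_index]].append(row[2])
    return {key: fmean(scores) for key, scores in groups.items()}


by_type = average_by(mentions, 1)    # one average per entity type
by_entity = average_by(mentions, 0)  # one average per named entity

for name, score in by_entity.items():
    print(f"{name}: {score:.2f}")
```

Grouping by type gives the broad picture, while grouping by name shows which individual themes are pulling sentiment up or down.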

In addition to capturing people’s top-of-mind responses via this question, we also use a separate tool to help us understand the ad’s emotional impact, using expression analysis captured via camera. We will cover this in our next post.

You can learn more about our ad test here.